Sonic Cycles

October 2022

Python, Logic, Premiere, MaxMSP

This is a series of five interconnected sonification experiments. Each project was developed in one day as part of a five-day research and design sprint.

These prototypes alternately obscure and externalize the human cognition that goes into the creation of sound, the solving of Rubik's cubes, and the playing of chess.

There are many theories, structures, and ideas that go into the making of sounds. However, when these sounds are physically heard, the cognitive process of creation is flattened into a single sonic experience. Similarly, the physical movement of the faces of a Rubik's cube omits the pattern recognition and problem-solving that go into finding a solution, and the physical movement of chess pieces around the board omits the strategy and future-thinking that go into playing chess.

During this week, I used different methods of creating sound to explore a cycle of sonification and reverse sonification with Rubik's Cubes and chess. I turned purely physical aspects of sound into Rubik's cube or chess positions, and then turned the cognitive processes of Rubik's cube-solving or chess-playing back into sound, using different methods of sonic composition. These latter translations emphasize the necessity of human cognitive input, and they cannot be automated. In contrast, the former translations deal with flattened sound, and thus are easily automated.

These experiments showed that properly externalizing cognition requires more than sonification alone. Adding spatial and visual elements would likely be more successful, especially for these particular cognitive processes (creating sound, solving Rubik's cubes, playing chess), which combine "pure" thought with physical manipulation.

Project 1: OP-1 Music

Goal: make a simple track with the OP-1 synthesizer (borrowed from Yoshe Li).

Project 2: OP-1 Music --> Rubik's Cube Scramble

Goal: use purely physical elements of the OP-1 music to generate a Rubik's cube scramble.

For info on Rubik's cube notation, see here.

Process

I used the six OP-1 audio tracks - Master L and R, plus four individual tracks - and mapped them to the six faces of the Rubik's Cube.

I then "binned" each wave, turning the data into a collection of points and lines. The binning process splits the data into equal time chunks, takes the average amplitude in each chunk as the y-value of the new point, and takes the time at which the original wave was closest to that average amplitude as the x-value of the new point. This allows for automated binning that also creates points with unique timestamps (x-values).
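A minimal sketch of the binning step described above. It is an illustration, not the code from the project repository. It assumes a uniformly sampled wave, so that equal sample counts stand in for equal time chunks; the function name `bin_wave` and its parameters are hypothetical.

```python
def bin_wave(times, amplitudes, n_bins):
    """Reduce a sampled wave to one (time, average amplitude) point per bin.

    Assumes `times` and `amplitudes` are equal-length sequences sampled at a
    uniform rate, so that equal sample counts approximate equal time chunks.
    """
    if n_bins <= 0 or len(times) != len(amplitudes):
        raise ValueError("need n_bins > 0 and matching input lengths")
    n = len(amplitudes)
    points = []
    for b in range(n_bins):
        start = b * n // n_bins
        end = (b + 1) * n // n_bins
        if start == end:
            continue  # more bins than samples: nothing falls in this bin
        chunk = amplitudes[start:end]
        mean = sum(chunk) / len(chunk)
        # x-value: time of the sample whose amplitude is closest to the mean
        closest = min(range(start, end), key=lambda i: abs(amplitudes[i] - mean))
        points.append((times[closest], mean))
    return points
```

Because each x-value is taken from a sample inside its own bin, no two points can share a timestamp.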

For each binned track, I converted each line segment into a clockwise or counterclockwise turn of the track's mapped face. Upwards lines (positive slope) correspond to clockwise turns, and downwards lines (negative slopes) correspond to counterclockwise turns. Each turn had a timestamp according to the line's x-axis position.
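The slope rule can be sketched as follows. This is a hedged illustration, with two assumptions that the text does not specify: each turn is stamped with the x-value of the segment's starting point, and flat segments (zero slope) produce no turn. The function name `segments_to_turns` is hypothetical. Turns use standard notation, where a bare face letter is clockwise and a trailing apostrophe is counterclockwise.

```python
def segments_to_turns(points, face):
    """Convert consecutive binned points into timestamped turns of one face.

    `points` is a list of (time, amplitude) pairs ordered by time.
    `face` is a face letter such as "R" or "U".
    """
    turns = []
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if y1 > y0:
            move = face          # rising line: clockwise turn
        elif y1 < y0:
            move = face + "'"    # falling line: counterclockwise turn
        else:
            continue             # assumption: a flat line yields no turn
        turns.append((x0, move)) # assumption: timestamp is the segment start
    return turns
```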

Finally, all movements for all faces were consolidated and ordered by timestamps to create the final scramble.
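The consolidation step amounts to a merge by timestamp. A minimal sketch, assuming each face contributes a list of `(timestamp, move)` pairs; the function name `build_scramble` is hypothetical, and ties between faces are broken by input order, which is a choice made here rather than something the text specifies.

```python
def build_scramble(turns_by_face):
    """Merge per-face lists of (timestamp, move) into one scramble string."""
    all_turns = [turn for turns in turns_by_face for turn in turns]
    # sorted() is stable, so turns with equal timestamps keep their input order
    ordered = sorted(all_turns, key=lambda turn: turn[0])
    return " ".join(move for _, move in ordered)
```

For example, `build_scramble([[(0.0, "R"), (2.0, "R'")], [(1.0, "U'")]])` gives `"R U' R'"`.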

Project 2 code: https://github.com/ryurongliu/sonic-cycles-project-2

Project 3: Rubik's Cube Solve --> Instrumental Music

Goal: use the cognitive process of solving a Rubik's cube, mapped to the cognitive process of instrumental composition, to create a short instrumental track.

Process

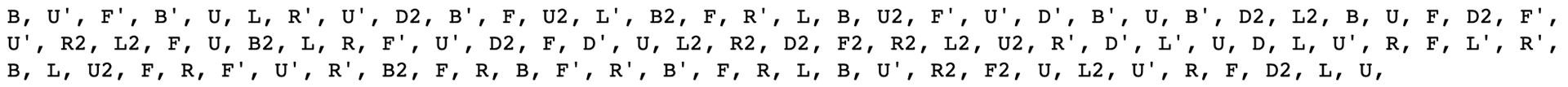

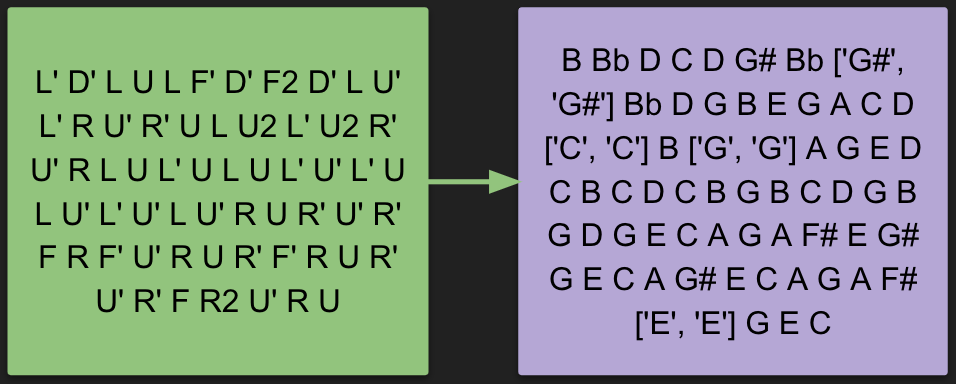

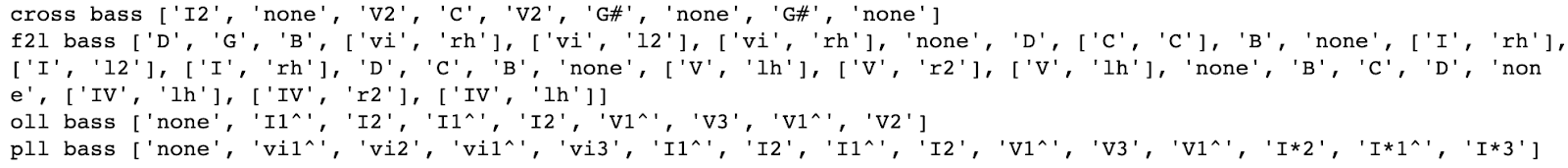

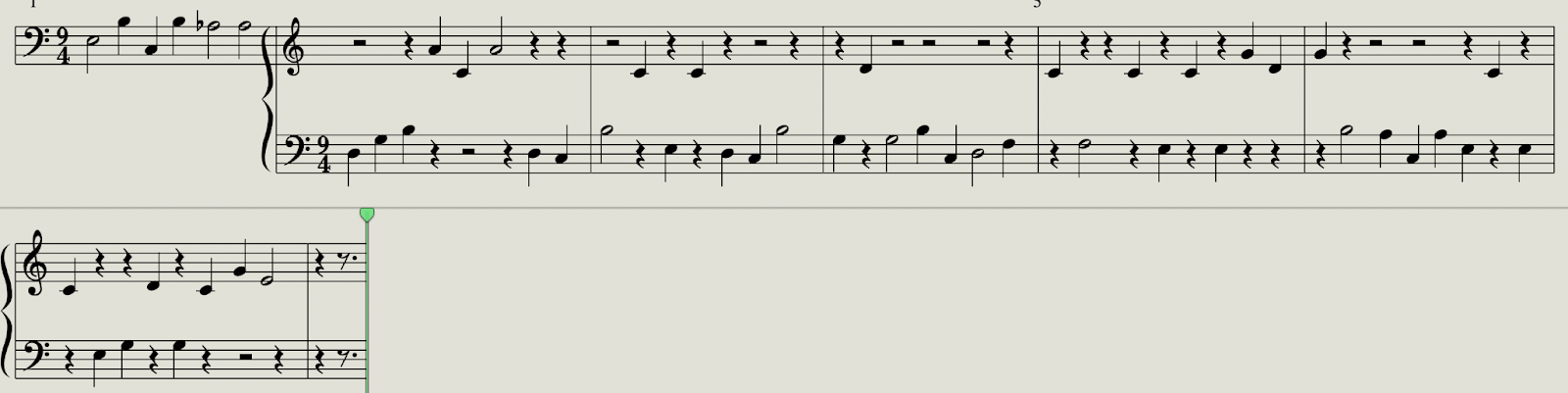

I began by finding a solve and notating its moves.

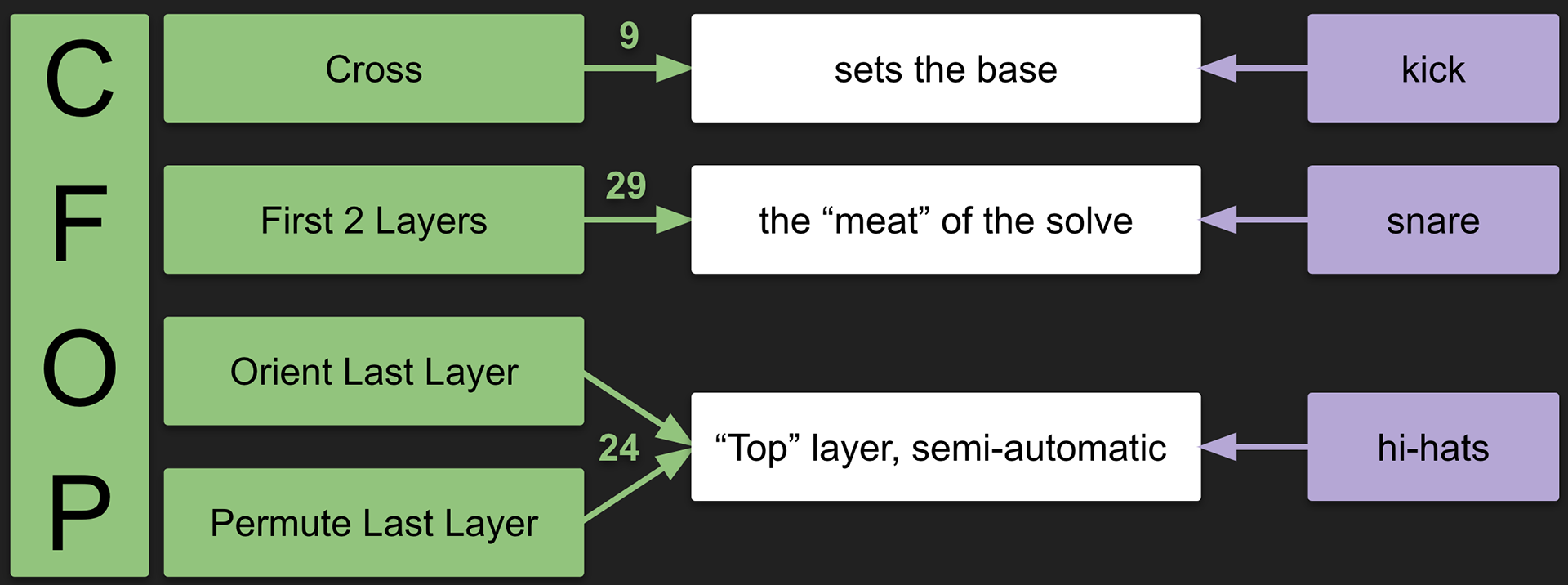

Then, using the four basic instrumental components of drums, bass, chords, and melody, I related each component's musical function to aspects of a Rubik's cube solve that serve a similar function.

Drums

I mapped each step of my solving method to particular parts of the drumset, according to their function. Then, I used the number of moves in each step of my particular solution to create a polyrhythm of those mapped drum pieces.

Melody

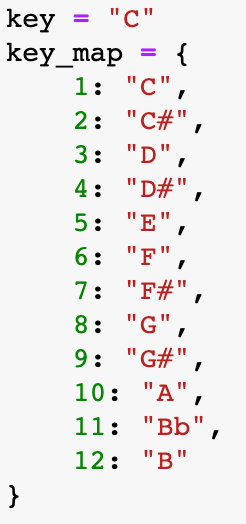

I mapped each possible move to each note in the 12-tone scale, based on the usefulness of each move in a solve and the usefulness of each note in my chosen key & mode of C Major. I then used this mapping to translate each move of the solve into melody notes, playing one note per beat and mapping double moves to eighth notes.
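The translation from moves to notes could look roughly like the sketch below. The `MOVE_TO_PITCH` table is entirely hypothetical (the actual mapping was chosen by hand from the usefulness of each move and note), and the sketch assumes that a double move such as `U2` becomes two eighth notes on the pitch of its base move. Notes are `(MIDI pitch, start beat, duration in beats)`.

```python
# Hypothetical move-to-pitch table (MIDI note numbers around middle C).
# The real mapping was a manual, judgment-based choice.
MOVE_TO_PITCH = {
    "R": 60, "R'": 62,   # C4, D4
    "U": 64, "U'": 65,   # E4, F4
    "F": 67, "F'": 69,   # G4, A4
}

def moves_to_melody(moves):
    """Turn a list of moves into (pitch, start_beat, duration) notes."""
    notes = []
    for beat, move in enumerate(moves):
        if move.endswith("2"):
            # assumption: a double move fills its beat with two eighth notes
            pitch = MOVE_TO_PITCH[move[:-1]]
            notes.append((pitch, float(beat), 0.5))
            notes.append((pitch, beat + 0.5, 0.5))
        else:
            notes.append((MOVE_TO_PITCH[move], float(beat), 1.0))
    return notes
```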

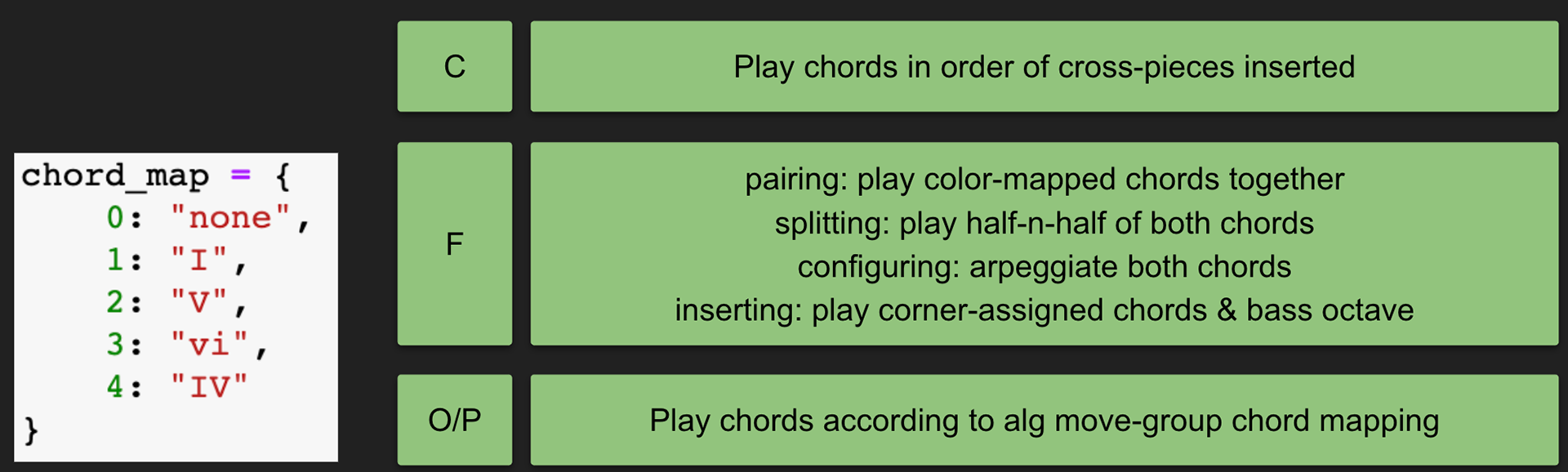

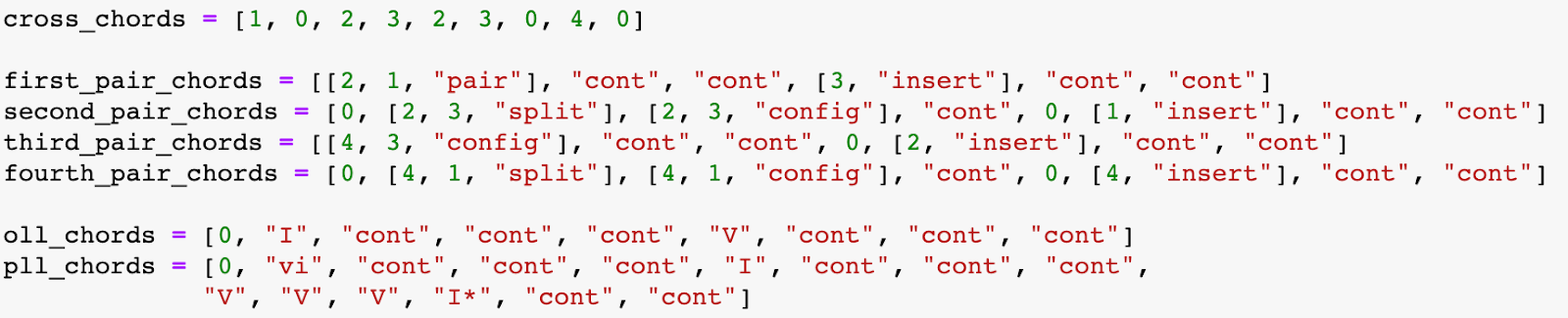

Chords

I picked four chords in C Major to use throughout the piece. I generated a sequence of chords and chord voicings by mapping the purpose of each move to one of these four chords. Since move purposes are different for different steps of the solve, I created a separate mapping for each step.

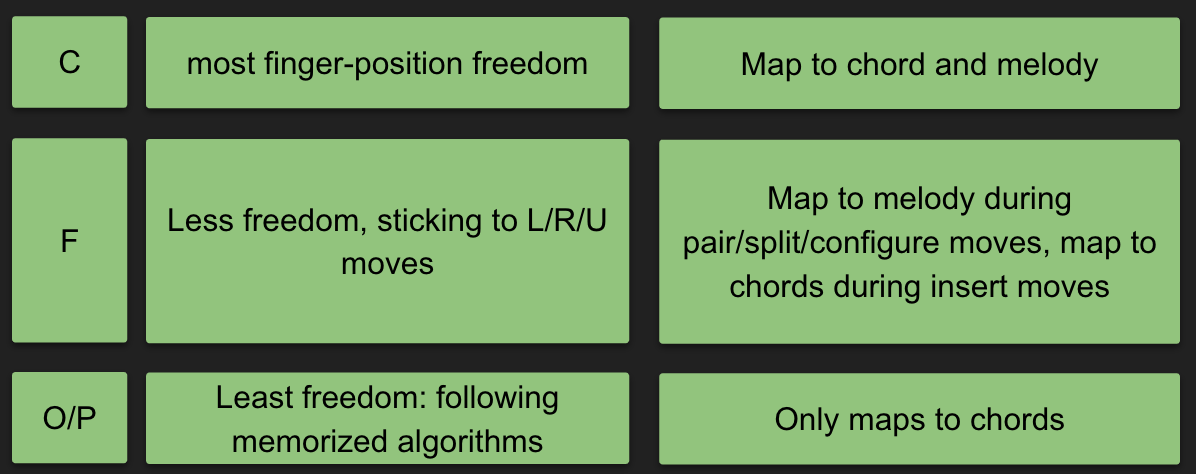

Bass

I mapped the fingers I used for each move to bass notes, using notes either from the accompanying chord or the accompanying melody note. Similar to the chords, I created a different mapping system for each step of the solve, since the reasoning for which fingers you choose to use changes in each step.

Logic

Finally, I used Logic's virtual instruments to input the composition as MIDI and produce the sound file.

Project 4: Instrumental Music --> Chess Midgame

Goal: use purely physical elements of the instrumental music to generate chess moves to a midgame position.

Process

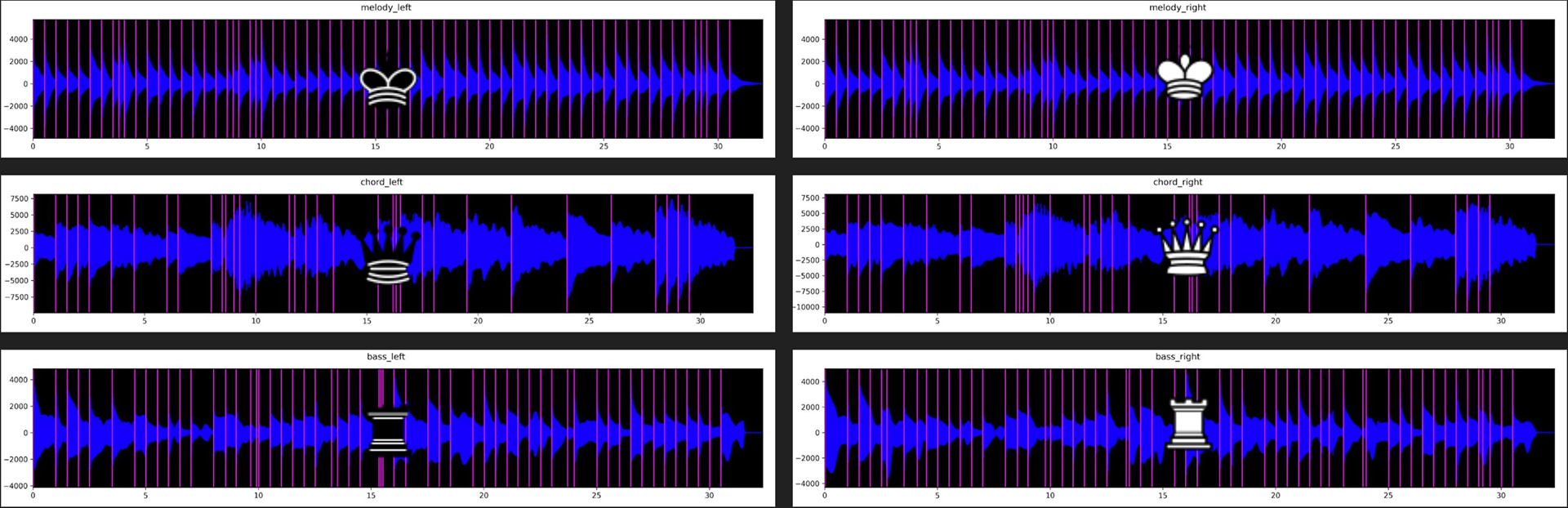

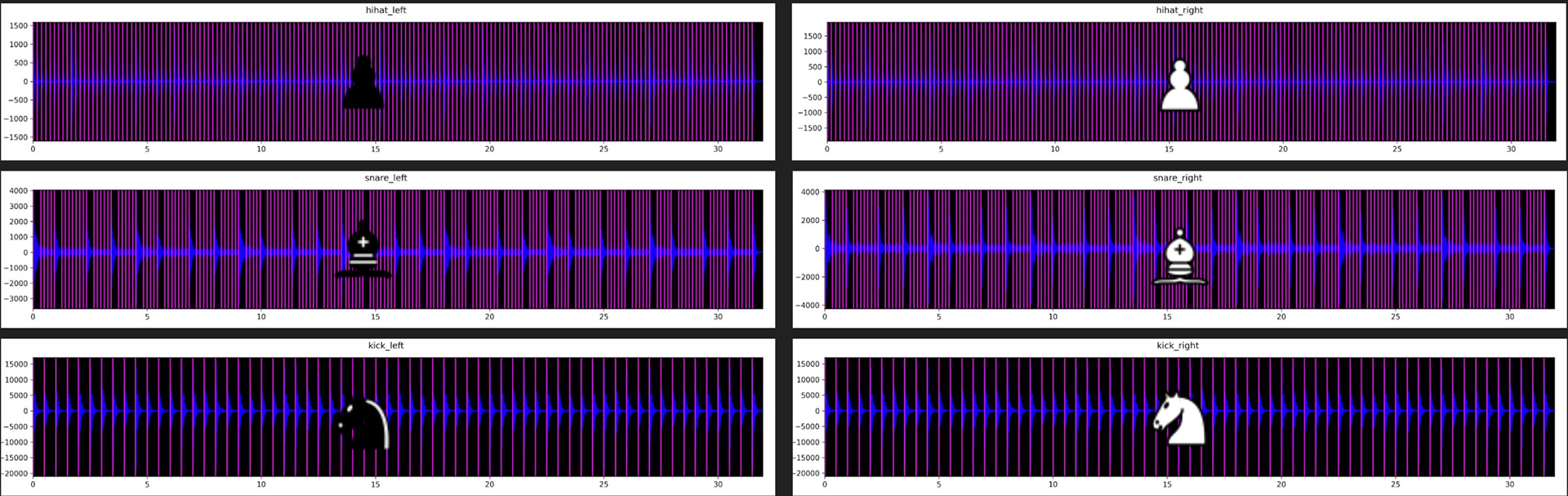

I mapped each instrumental track to a chess piece, using left channels for black and right channels for white.

I then used the Essentia python package to find the audio onsets of each track.

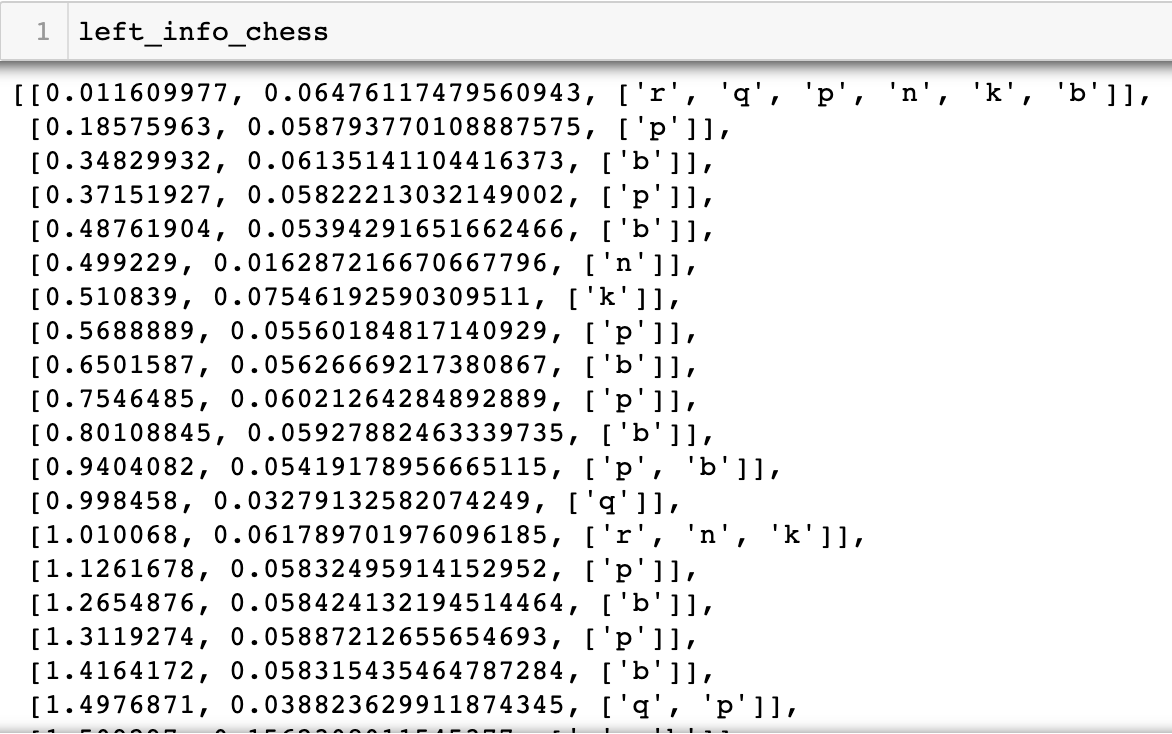

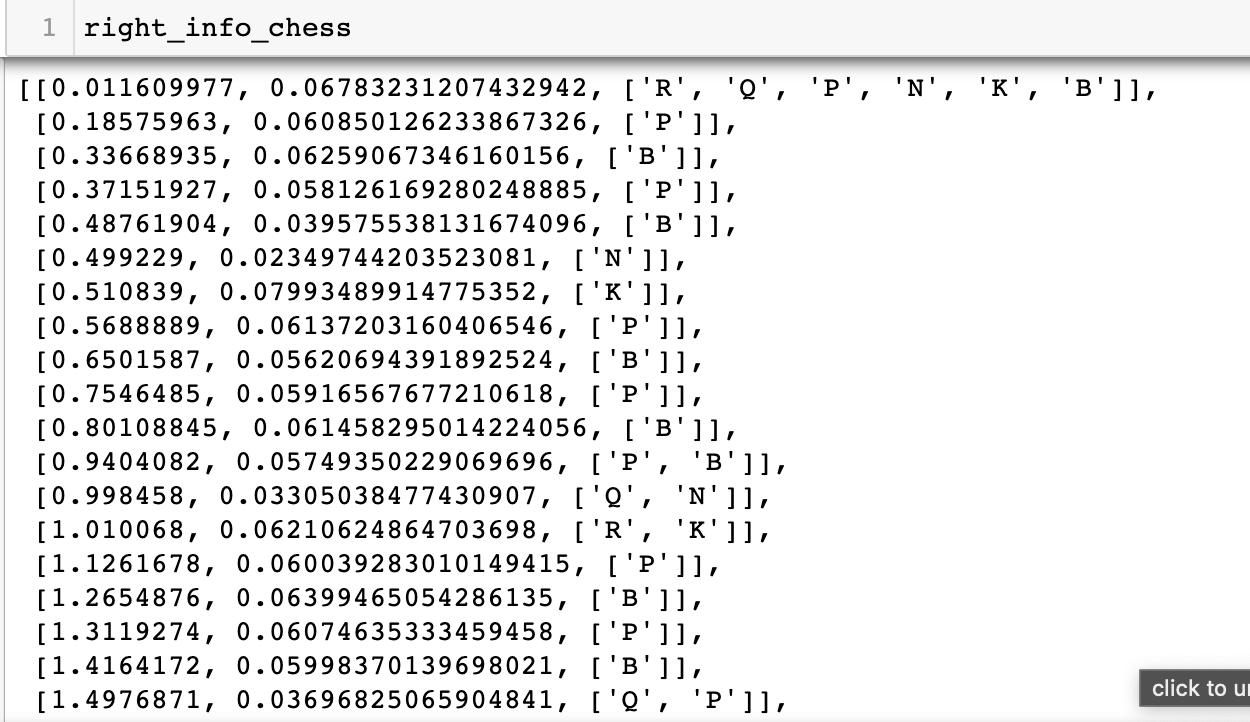

For each onset, I created a datum consisting of the onset time, the average amplitude of the master track at that time, and the chess piece corresponding to that track. I consolidated the onsets by ordering them by timestamp and combining onsets that occurred at the same time.
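A minimal sketch of the consolidation step, assuming each datum is a `(time, amplitude, piece)` tuple and that "the same time" means exactly equal timestamps (in practice, times might first be rounded to a tolerance). The function name `consolidate` is hypothetical.

```python
def consolidate(data):
    """Order data by time and merge entries that share a timestamp.

    `data` is an iterable of (time, amplitude, piece) tuples.
    Returns a list of (time, amplitude, [pieces]) ordered by time.
    """
    grouped = {}  # time -> (amplitude, list of pieces); insertion-ordered
    for time, amplitude, piece in sorted(data, key=lambda d: d[0]):
        if time in grouped:
            grouped[time][1].append(piece)
        else:
            # the master-track amplitude is the same for every track at a
            # given time, so the first one seen is kept
            grouped[time] = (amplitude, [piece])
    return [(t, amp, pieces) for t, (amp, pieces) in grouped.items()]
```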

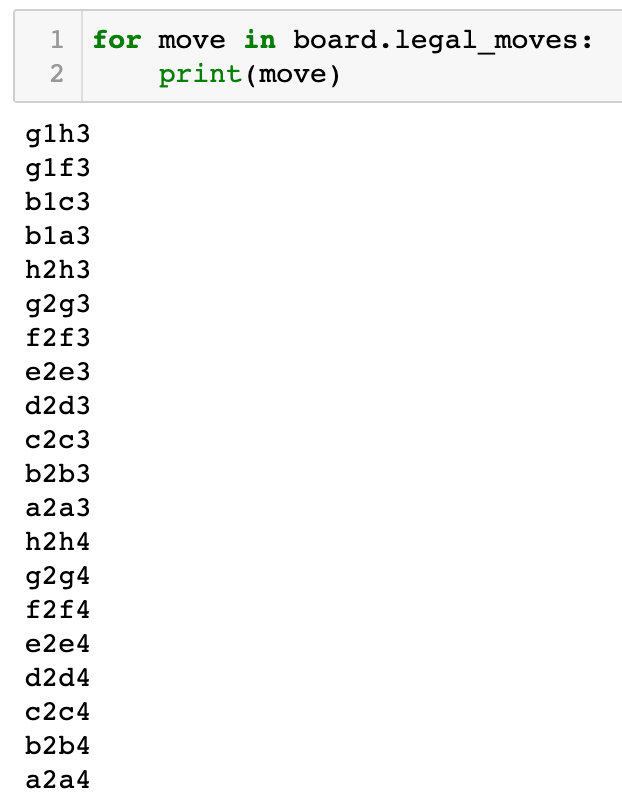

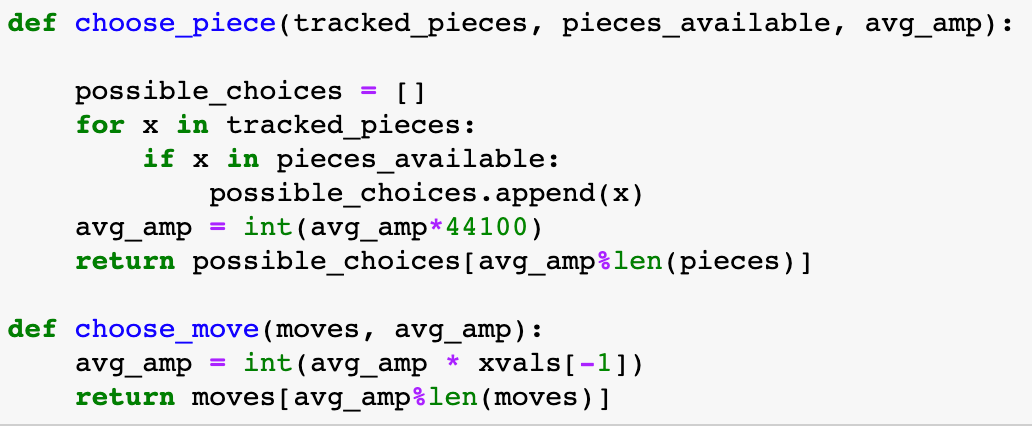

To pick each move, I first compiled the list of all possible legal moves. Then, I selected the next onset datum whose pieces had a possible move. Finally, I used the average amplitude of the master track to choose which piece to move, and which possible move to take.
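One way this selection could work is sketched below. The text does not say how amplitude is turned into a choice, so the sketch assumes amplitudes normalized to the range 0 to 1 and scaled into a list index. The names `pick_index` and `choose_move` are hypothetical, and the legal moves are passed in as a plain dictionary rather than computed from a board.

```python
def pick_index(amplitude, n):
    """Scale an amplitude in [0, 1] to an index in range(n), with clamping."""
    return max(0, min(int(amplitude * n), n - 1))

def choose_move(data, start, legal_moves):
    """Pick the next move from consolidated data.

    `data` is a list of (time, amplitude, [pieces]) ordered by time.
    `legal_moves` maps each piece to its list of currently legal moves.
    Returns (next_start, piece, move), or None if no datum is usable.
    """
    for i in range(start, len(data)):
        _, amplitude, pieces = data[i]
        movable = [p for p in pieces if legal_moves.get(p)]
        if not movable:
            continue  # none of this datum's pieces can move: skip it
        piece = movable[pick_index(amplitude, len(movable))]
        options = legal_moves[piece]
        move = options[pick_index(amplitude, len(options))]
        return i + 1, piece, move
    return None
```

After each chosen move, `legal_moves` would need to be recomputed for the new position before calling `choose_move` again with the returned `next_start`.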

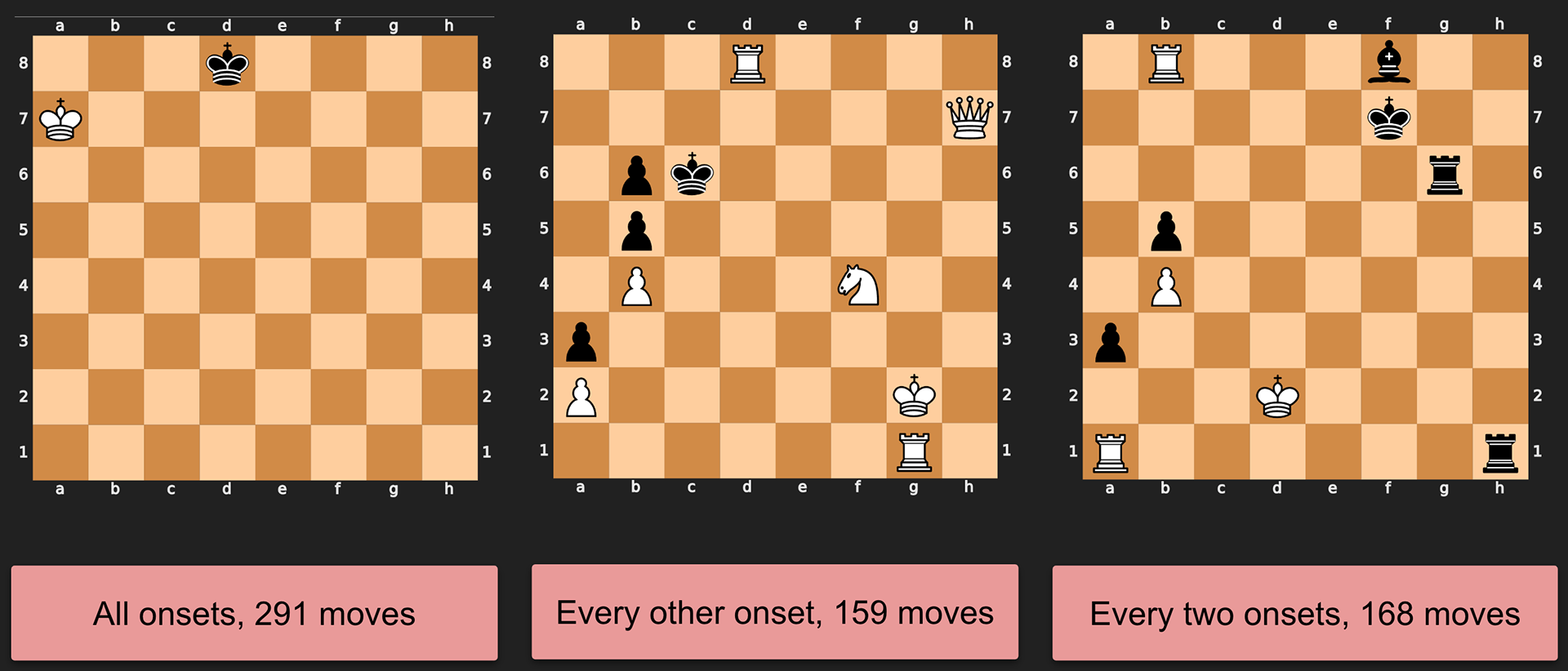

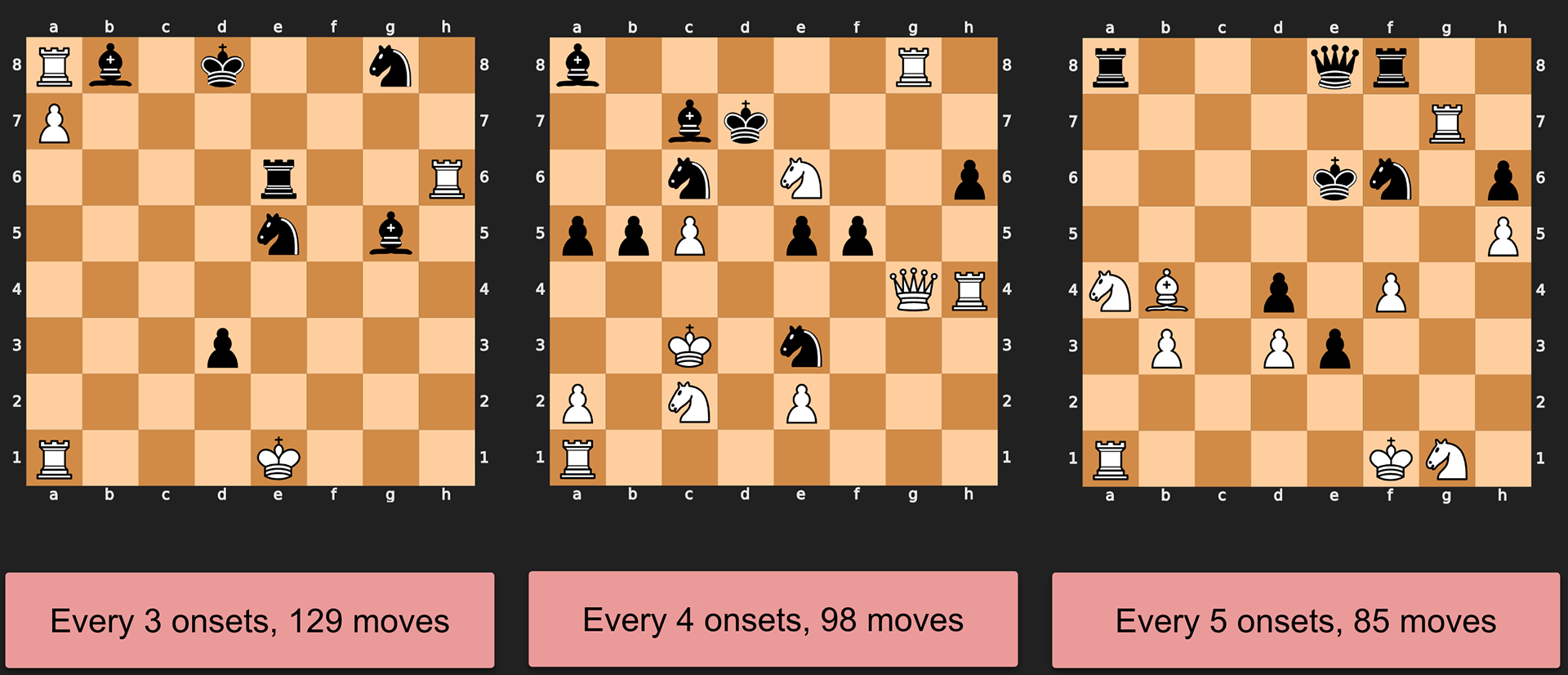

I started by using all of the onset data to generate moves. However, this produced a 291-move game that ended in a draw. I experimented with skipping data points until I found a good midgame position, which came from using every fifth data point. (I did try playing the game generated from every fourth onset, but it ended extremely quickly.)

Project 5: Chess Playthrough --> DSP Music with MaxMSP

Goal: Use the cognitive process of playing chess, mapped to the cognitive process of creating DSP sounds, to generate synthesized sounds.

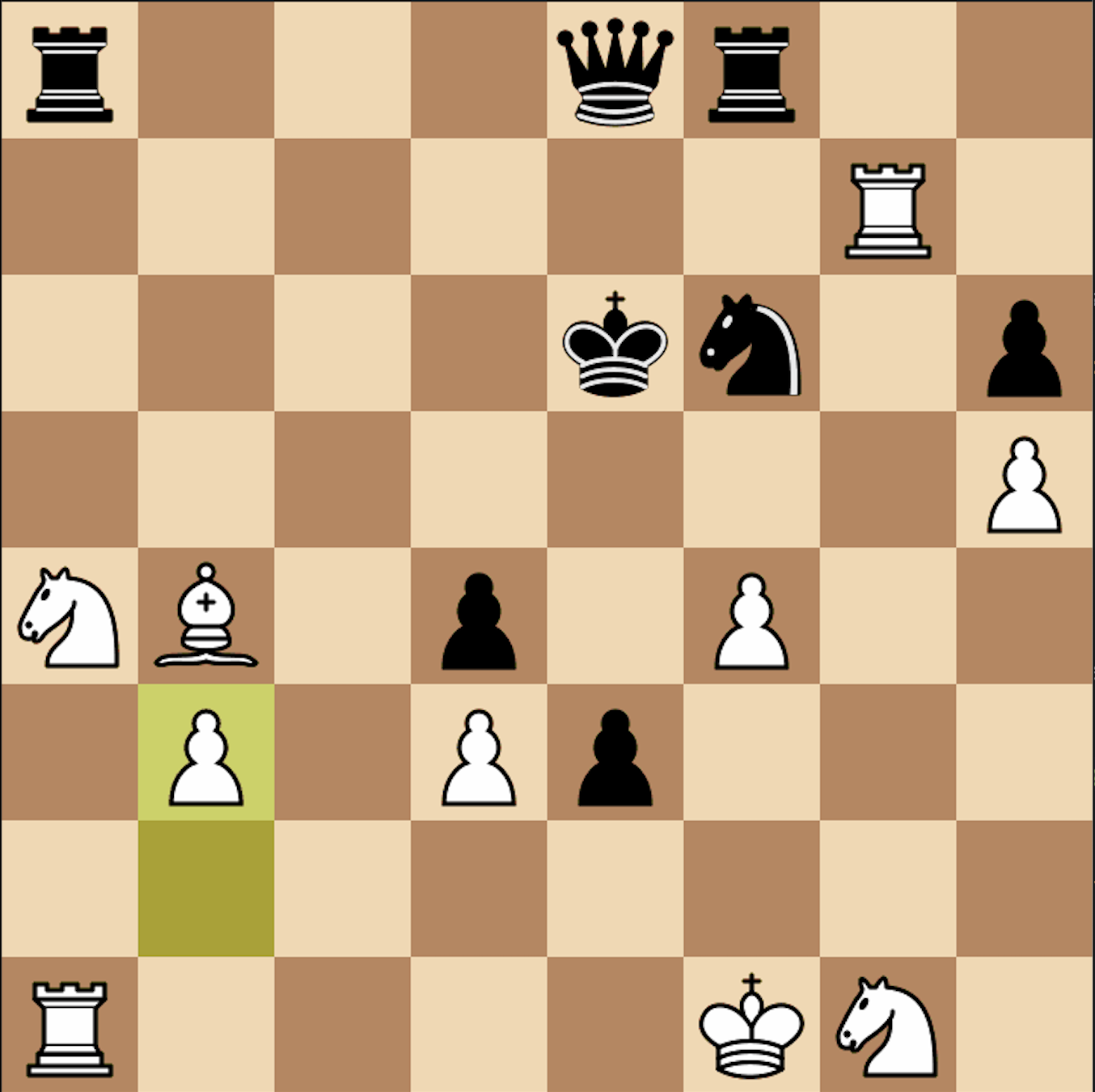

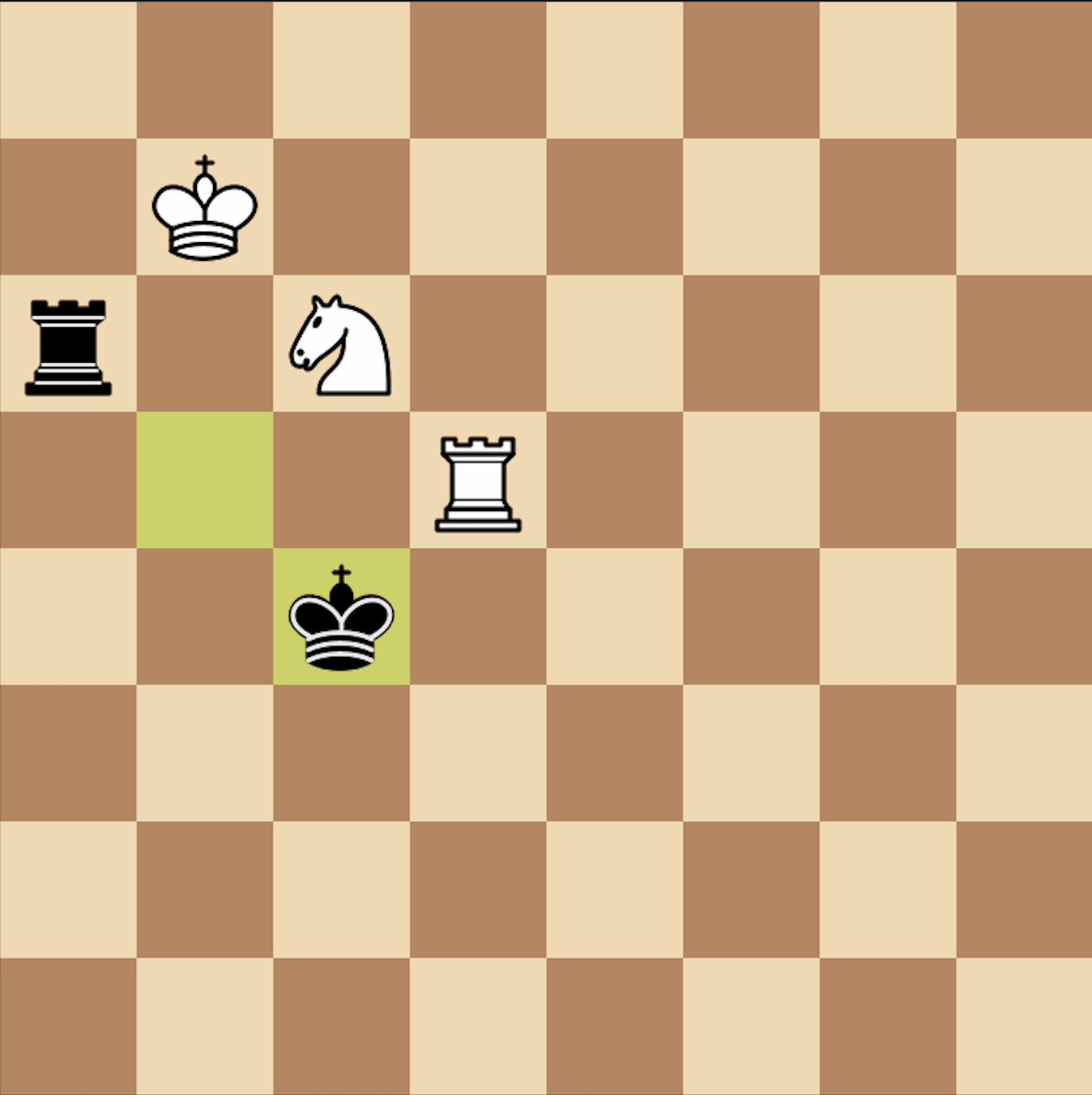

For this day, I worked through the ideation process for an audiovisual installation that externalizes the cognitive process of playing chess. I started by finishing the previous day's generated game with my friend, Iris (shown above).

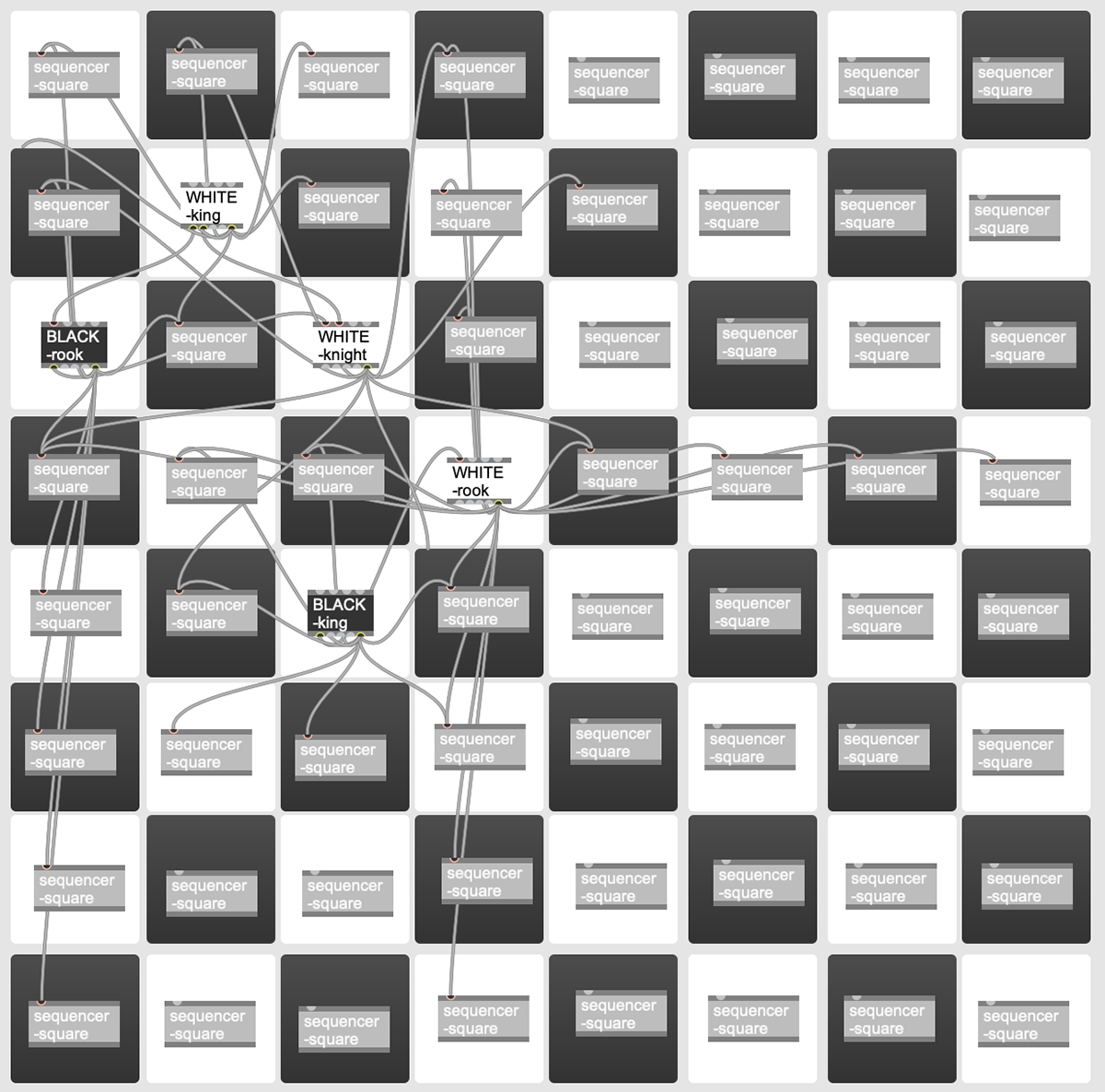

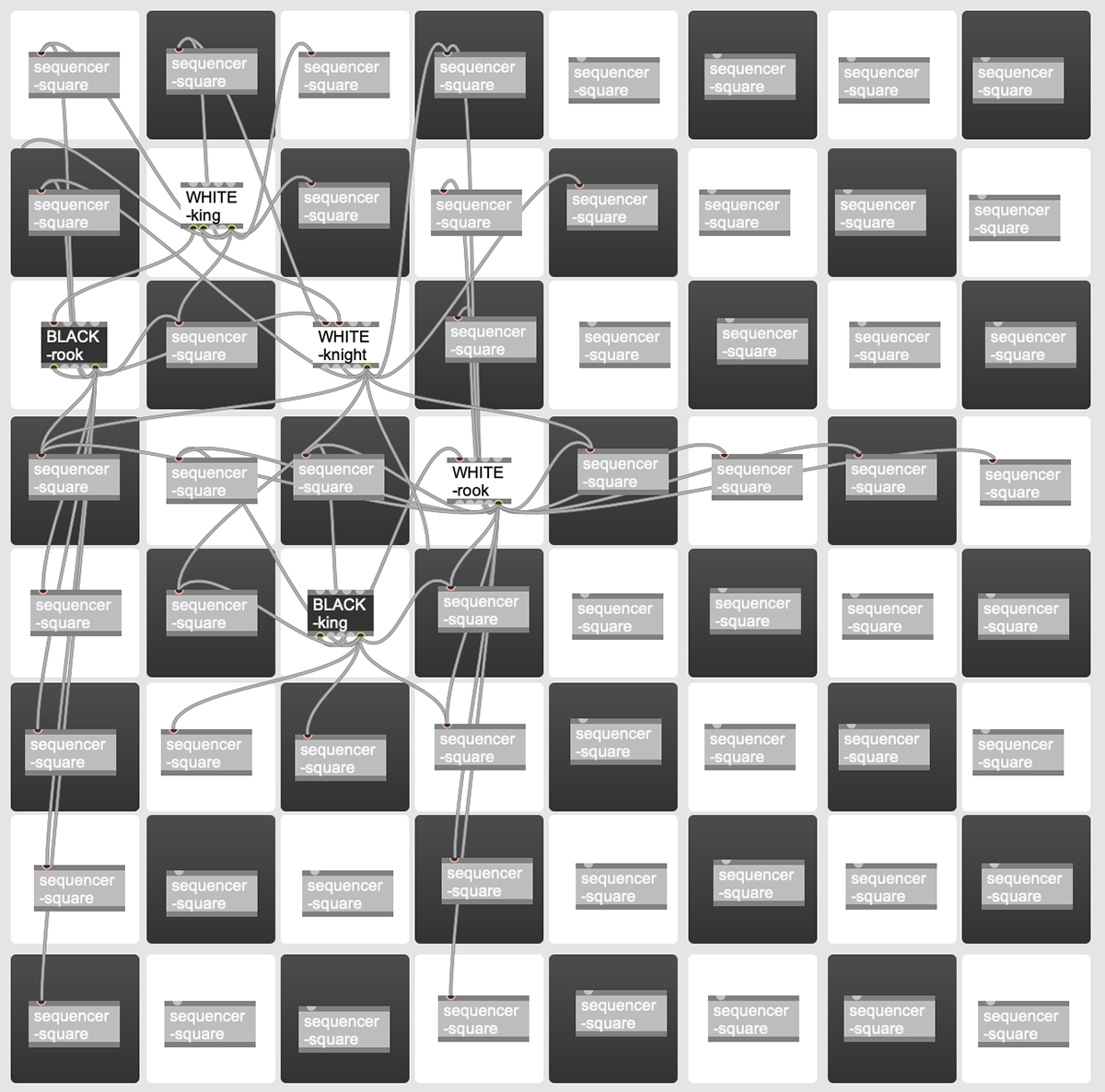

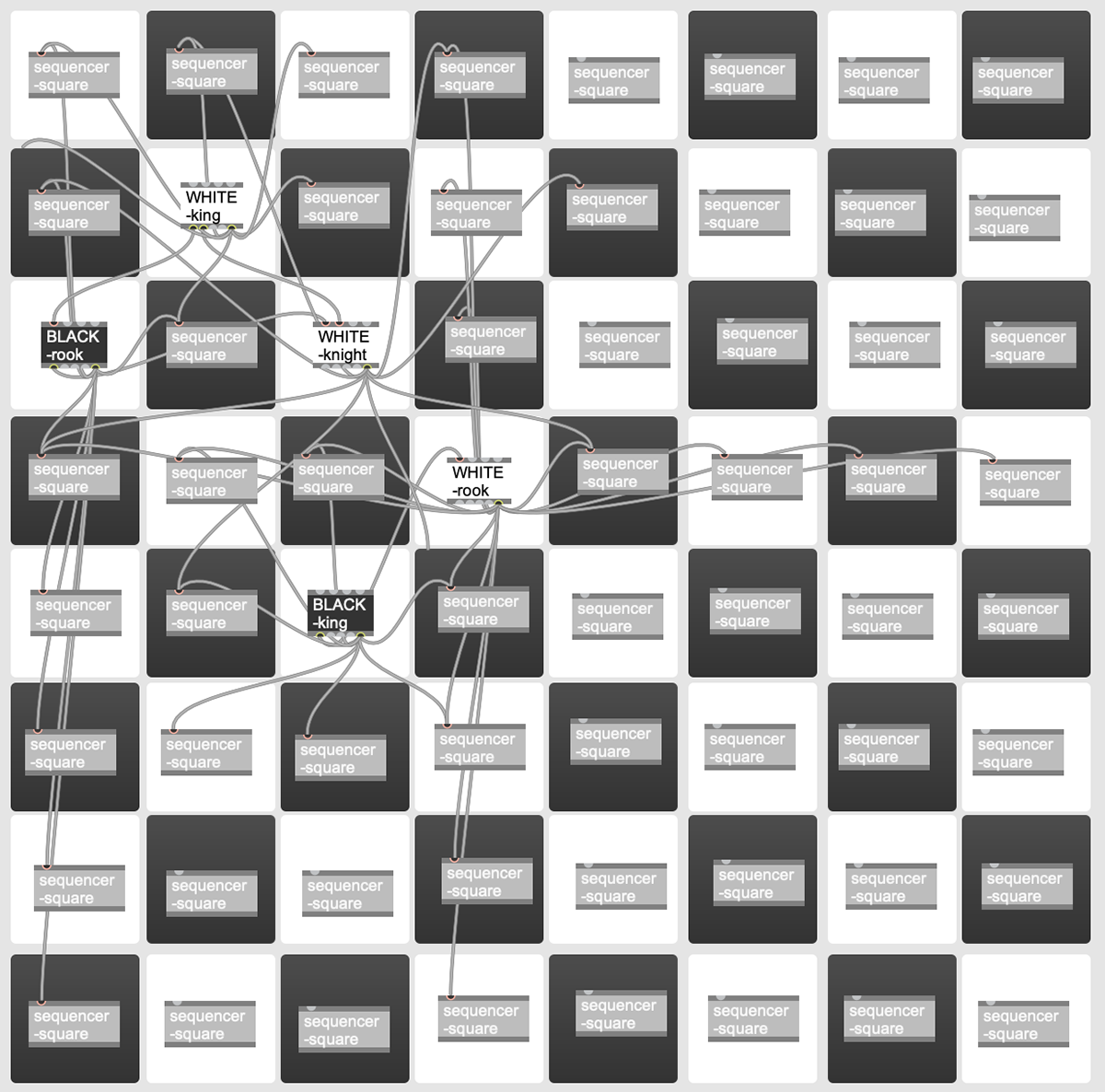

I then decided to use the relationships between pieces as my input data for sonification. These relationships of attacking, protecting, pinning, and checking can be visualized as a directed graph with chess pieces as nodes.
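As a rough sketch of this directed graph, the snippet below builds labeled edges between occupied squares. It assumes the squares each piece bears on are supplied as input (here as a hypothetical `bears_on` dictionary) rather than computed from chess rules, and it leaves out pins, which need ray information. The function name `build_relation_graph` is also hypothetical.

```python
def build_relation_graph(pieces, bears_on):
    """Build a directed graph of piece-to-piece relationships.

    `pieces` maps an occupied square to (color, kind).
    `bears_on` maps an occupied square to the squares its piece bears on.
    Returns a dict: source square -> list of (target square, relation).
    """
    graph = {}
    for source, targets in bears_on.items():
        color, _ = pieces[source]
        for target in targets:
            if target not in pieces:
                continue  # empty square: no piece-to-piece relationship
            target_color, target_kind = pieces[target]
            if target_color == color:
                relation = "protects"
            elif target_kind == "king":
                relation = "checks"
            else:
                relation = "attacks"
            graph.setdefault(source, []).append((target, relation))
    return graph
```

Each edge `(source, target, relation)` would then correspond to one connection between the two pieces' synths.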

This maps perfectly to MaxMSP, where objects and their patchcords can also be conceptualized as a directed graph with objects as nodes. Thus, I would assign each chess piece to a unique corresponding synth, with inputs and outputs available for expressing the relationships of attacking, protecting, pinning, and checking. The connections between pieces would modulate each synth's output signal, as in modular synthesis.

Thus the piece-to-piece relationships would shape the sounds associated with each piece. To sequence these sounds, I would use each piece's possible squares of movement, with all empty squares as potential sequencers.

Each unique board state therefore corresponds to a unique sequence of uniquely shaped sounds. Below is an example of how the MaxMSP object connections would correspond to a particular board state.

Finally, to show the thoughts occurring between board states, MaxMSP patches corresponding to "considered" states of the board would pop up briefly and then exit.

I also considered a range of other installation possibilities, including:

• 64 speakers arranged in a grid, with each speaker playing sounds corresponding to the piece on that square

• a physical chessboard, capable of communicating with the computer for instantaneous audiovisualization

• starting off with "considered" states supplied by a chess engine, and then slowly using machine learning to "learn" the play style of participants and reflect that style in the "considered" states

• playing different "genres" of sound for black vs. white

• using projection mapping to project the MaxMSP chessboard onto the speaker grid, to see the changing patchcords